From Gaming to AI: How GPUs are driving the Technology till Artificial Intelligence

Sam Altman said OpenAI’s inability to access enough graphics processing units (GPUs), the specialized computer chips used to run A.I. applications, is delaying OpenAI’s short-term plans and causing problems for developers using OpenAI’s services.

By now, most of us have experienced the magic of AI and glimpsed its potential. Behind it is a core piece of hardware that keeps it alive and running: the GPU (Graphics Processing Unit).

Mention the word GPU, and many gamers and power users immediately think of one name: NVIDIA, with its RTX and GTX cards. For a long time, NVIDIA has dominated the GPU market, holding off competitors and remaining the biggest player in the hardware that powers gaming and other compute-heavy workloads.

After Sam’s comments, it’s evident that artificial intelligence relies heavily on graphics processing units.

So let’s understand how GPUs have driven, and are still driving, many industries. The first question is:

“What is a GPU?”

In simple terms, a GPU, or Graphics Processing Unit, is a piece of hardware designed to manage and accelerate the creation of images and graphics for display on a device. It’s essentially a chip designed specifically for performing the complex calculations that graphics require.

GPUs are particularly good at performing many calculations at once, a feature known as parallel processing. This makes them not only great for creating graphics, but also for other tasks that involve a lot of computations done simultaneously, such as training and running artificial intelligence algorithms.

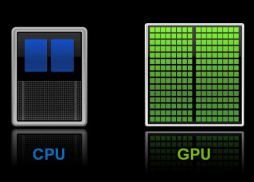

In a typical computer setup, the GPU is separate from the CPU (Central Processing Unit), which is the main processor of the computer handling most of the operations. While the CPU focuses on performing a few complex tasks very efficiently, the GPU takes on tasks that can be broken down into many smaller ones happening at the same time.

This unique capability has made the GPU a valuable resource in the field of AI, where handling massive amounts of data quickly and efficiently is often required. It grew out of the GPU’s original job: performing the complex calculations necessary for rendering images, which makes GPUs especially important in video games and 3D applications where high-quality, fast graphics are crucial.

The primary function of a Graphics Processing Unit (GPU) in gaming is to render images, video, and animations quickly and efficiently.

When you play a video game, you’re essentially interacting with a complex, dynamic piece of software that requires real-time processing of large amounts of data to generate the images you see on your screen.

Here’s how the GPU fits into this process:

1. Rendering Images: The GPU takes the data about the game’s 3D geometry, textures, and lighting, and turns it into a 2D image to display on the screen. This process involves a lot of complex math and computations, which the GPU is designed to handle very efficiently.

2. Creating Smooth Motion: Video games often involve lots of moving elements and changing scenes. The GPU has to constantly update the display to reflect these changes, creating the illusion of smooth, continuous motion. This is why the rate at which the GPU can deliver finished frames (measured in frames per second, or fps) is so important for a good gaming experience.

3. Generating Realistic Visual Effects: Modern games include a wide range of visual effects to make the gaming experience more immersive. These could include realistic lighting and shadows, particle effects (like fire or smoke), water reflections, and more. The GPU is responsible for calculating and rendering these effects in real time.

4. Handling Video and Animation: Besides static images, games also include video and animation sequences. These require the GPU to process and play back video data, as well as to calculate and render complex animations.
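To make the first item in the list above a little more concrete, here is a minimal Python sketch of how a single 3D point can be turned into a 2D pixel position. It assumes a simplified pinhole-camera model with a perspective divide. The function names, the `focal_length` parameter, and the screen size are illustrative assumptions, not how any particular game engine or GPU driver does it.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[int, int]


def project_point(point: Point3D, focal_length: float,
                  width: int, height: int) -> Pixel:
    """Project one 3D point (camera space, z > 0 is in front) to a pixel."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Perspective divide: the farther away a point is, the closer it
    # lands to the centre of the image.
    x_ndc = focal_length * x / z
    y_ndc = focal_length * y / z
    # Map the range [-1, 1] onto pixel coordinates; screen y grows downward.
    px = int(round((x_ndc + 1) * 0.5 * (width - 1)))
    py = int(round((1 - y_ndc) * 0.5 * (height - 1)))
    return px, py


def project_vertices(vertices: List[Point3D], focal_length: float = 1.0,
                     width: int = 641, height: int = 481) -> List[Pixel]:
    """Project every vertex; each one is handled independently of the rest."""
    return [project_point(v, focal_length, width, height) for v in vertices]
```

Notice that each vertex is projected without looking at any other vertex. That independence is exactly what lets a GPU hand different vertices to different cores.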

In all these ways, the GPU is crucial for enabling the high-quality, real-time graphics that gamers expect in modern video games.
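A quick back-of-the-envelope calculation shows why frame rate puts so much pressure on the GPU. The sketch below is only arithmetic. The resolution (1920×1080) and frame rate (60 fps) in the usage note are example values chosen for illustration, and the helper names are made up for this post.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to finish one frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """How many pixel values must be produced every second."""
    return width * height * fps
```

With those example values, `frame_budget_ms(60)` comes out at roughly 16.7 ms per frame, and `pixels_per_second(1920, 1080, 60)` is 124,416,000 pixels every second. That is before counting any of the lighting or effects work done for each pixel.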

It’s worth noting that while all these tasks are computationally demanding, the GPU is able to handle them efficiently because they can be broken down into many smaller tasks that can be performed simultaneously — a capability known as parallel processing. This is also what makes GPUs so useful in AI applications, but that’s a topic for another part of our discussion. 😃

But if GPUs were created for heavy workloads like gaming, when did they find their way into other areas?

The potential of Graphics Processing Units (GPUs) beyond gaming began to emerge when researchers and developers recognized their ability to perform parallel processing very efficiently.

Let’s briefly look at parallel processing.

Parallel processing is the ability to perform multiple calculations simultaneously. This is different from traditional CPUs (Central Processing Units), which excel at sequential processing: working through a small number of tasks one after another, very quickly. While this works well for many computing tasks, it’s not as efficient for tasks that involve a lot of repetitive, parallelizable calculations.

In a gaming context, parallel processing is used to render multiple pixels on the screen simultaneously. Each pixel is calculated independently of the others, which means that these calculations can be distributed across the many cores of a GPU (modern GPUs can have thousands of cores, compared to a few cores on a typical CPU). This allows for fast, efficient rendering of graphics.
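Here is a small Python sketch of that idea. Each pixel’s brightness is computed from its own coordinates only, so the work can be handed to several workers and the result is the same as doing it one pixel at a time. The tiny 8×4 "screen", the gradient formula, and the use of a thread pool are all assumptions made for illustration. Python threads only mimic the structure of the work and will not give GPU-like speed.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

WIDTH, HEIGHT = 8, 4  # a deliberately tiny "screen" for the example


def shade_pixel(index: int) -> int:
    """Brightness (0-255) of one pixel, using only that pixel's position."""
    x, y = index % WIDTH, index // WIDTH
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2


def render_sequential() -> List[int]:
    """CPU-style: one pixel after another."""
    return [shade_pixel(i) for i in range(WIDTH * HEIGHT)]


def render_parallel(workers: int = 4) -> List[int]:
    """GPU-style structure: independent pixels spread across workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map keeps results in input order, so the image is unchanged.
        return list(pool.map(shade_pixel, range(WIDTH * HEIGHT)))
```

Because no pixel depends on another, `render_parallel()` and `render_sequential()` produce the same list. On a real GPU the same pattern runs across thousands of cores instead of four threads.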

People noticed that this computing power could also be applied to any kind of calculation that can be split into many independent parts. One prominent example is Artificial Intelligence (AI), and machine learning in particular, where huge numbers of data points can be processed at the same time.

Machine learning involves training models on large amounts of data, which requires performing many calculations simultaneously. For example, in a neural network (a common type of machine learning model), the calculations for each neuron within a layer can be performed independently of the other neurons in that layer, making this a highly parallelizable task.
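The sketch below shows what that independence looks like for one layer of a neural network. Each neuron computes a weighted sum of the same inputs, adds its bias, and applies an activation function. The sigmoid activation, the function names, and the toy numbers are assumptions picked for illustration, not taken from any specific model.

```python
import math
from typing import List, Sequence


def neuron_output(weights: Sequence[float], bias: float,
                  inputs: Sequence[float]) -> float:
    """One neuron: weighted sum of the inputs plus bias, then a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(weight_rows: Sequence[Sequence[float]],
                  biases: Sequence[float],
                  inputs: Sequence[float]) -> List[float]:
    """All neurons in a layer read the same inputs but never each other's
    outputs, so each loop iteration could run on a separate GPU core."""
    return [neuron_output(w, b, inputs) for w, b in zip(weight_rows, biases)]
```

For example, `layer_forward([[0.0, 0.0], [1.0, -1.0]], [0.0, 0.0], [2.0, 2.0])` gives `[0.5, 0.5]`, because both weighted sums are zero and the sigmoid of zero is 0.5. In practice, libraries express this whole loop as one matrix multiplication, which is the operation GPUs are especially good at.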

Because GPUs can perform these calculations more quickly and efficiently than CPUs, they significantly reduce the time and computational resources needed to train these models. This has made GPUs an essential tool for many AI applications, leading to their widespread adoption in this field.

The realization that GPUs might be utilized in this manner has resulted in substantial advances in AI, allowing for more complicated models and applications that were previously impossible due to computational limits.

This unanticipated use of GPUs has been a crucial driver in the AI industry’s recent rise.

Before we dig into how GPUs power AI, it’s good to know that GPUs are not just for games anymore. They are now used for many different tasks, like solving tough math problems, analyzing lots of data, and helping with AI. This is called general-purpose computing on GPUs (GPGPU).

Why are GPUs good for AI?

It’s because they can do many things at the same time, which is really useful when working with a lot of data or doing lots of calculations quickly.

Now, let’s look at some examples:

1. Deep Learning: This is a type of AI that learns from lots of data. Google DeepMind used GPUs to train an AI program called AlphaGo. The GPUs helped AlphaGo learn quickly, and it went on to beat a world champion at the game of Go.

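2. Self-Driving Cars: Cars that drive themselves rely on GPU-style parallel processors to make sense of all the data coming from their sensors. This helps them make quick decisions. For example, Tesla has used NVIDIA GPUs in its cars’ driver-assistance computers and trains its driving models on large GPU clusters.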

3. Computer Vision/AR/VR: This is when AI is used to understand images. Companies like Facebook use GPUs to help their AI understand and recognize faces in photos.

4. Powering LLMs: AI programs that understand human language also use GPUs. One example is OpenAI’s GPT-3. It uses GPUs to process a lot of data quickly and understand language better.

So, in all these cases, GPUs help make AI faster and more powerful. They are a big reason why these AI projects have been so successful.

“So, how exactly do GPUs give AI these superpowers?”

Let’s explore.

So far, we have seen some real-world examples of how GPUs are being used in AI. Now, let’s understand why this matters and how it has expanded what AI can do.

Using GPUs in AI is like giving AI a boost. It allows AI to work faster, handle more data, and solve more complex problems. Here’s what that means:

1. Making Learning Faster: GPUs help AI learn more quickly. Imagine if you had to read an entire library of books. It would take a really long time if you read one book after another. But what if you could read all the books at the same time? That’s what GPUs allow AI to do. They let AI process lots of data at once, which makes learning much faster.

2. Working with More Data: GPUs also allow AI to handle bigger amounts of data. It’s like being able to see the whole picture instead of just a small piece. This means AI can make better decisions and predictions because it has more information to work with.

3. Solving More Complex Problems: With GPUs, AI can tackle more difficult problems. Imagine giving a math student a powerful calculator. They could solve much more complicated math problems than they could without it. The same goes for AI. With GPUs, AI can use more complex methods that can do more advanced tasks.

Because of these improvements, AI is showing up in more places and in more ways. It’s not just for tech companies or research labs anymore. Now, businesses, hospitals, schools, and even our homes are starting to use AI. And a lot of that is possible because of the power of GPUs.

Now, let’s take a peek into the future, powered by NVIDIA’s recent announcements as of June 2023. Not only has NVIDIA continued to blaze the trail in the realm of AI, but it has also unveiled new developments that promise to push the boundaries of what’s possible.

Firstly, NVIDIA’s Computex 2023 keynote was a smorgasbord of exciting news. The company’s Q1 profits soared, largely due to new AI hardware that’s turbocharging the latest large language models at Microsoft, Google, and OpenAI. What’s more, NVIDIA has gone big in the world of AI, unveiling a slew of products and platforms that revolve around AI and generative AI. The NVIDIA GH200 Grace Hopper Superchip, which pairs a Grace CPU with a Hopper GPU, is set to bring major benefits to AI tools and services.

Secondly, NVIDIA announced the DGX GH200, an AI supercomputer that delivers one exaflop of AI performance and 144 TB of shared memory. This goliath links 256 GH200 Grace Hopper Superchips so that they function as one giant GPU. Tech giants like Google Cloud, Meta, and Microsoft are expected to be among the first to get access, hinting at the transformative power of this innovation.

But NVIDIA isn’t just about AI. The company hasn’t forgotten the gaming community. Its GeForce RTX 40 series keeps expanding, with the RTX 4060 and 4060 Ti models bringing the lineup to mainstream gaming enthusiasts.

And then there’s Omniverse, NVIDIA’s full-stack cloud environment for developing, deploying, and managing industrial metaverse applications. Now hosted on Microsoft Azure, Omniverse allows every team involved in a product’s life cycle to use the same CAD data and the same digital twin environment. This enables unique capabilities like simulating factories and warehouses, training robots and AI models, and creating synthetic data sets for AI models and robotics.

Moreover, NVIDIA highlighted its Jetson Orin modules, which it says offer up to eight times the performance of the previous Jetson generation. And companies like Mercedes-Benz and BMW are already using Omniverse to plan and build state-of-the-art factories for their vehicles.

Lastly, NVIDIA’s Drive platform is being adopted by a diverse list of partners, including industry leaders like BMW and Mercedes-Benz and startups like Pony.ai and DeepRoute.ai. This wide adoption is enabling NVIDIA to innovate in the digital automotive space and beyond.

With all these recent developments, NVIDIA’s Omniverse has become a valuable tool for enterprises looking to streamline their operations and stay ahead of the curve in a rapidly evolving technological landscape.

These are just glimpses of the impact of GPUs on AI. The future holds even more exciting possibilities, with NVIDIA’s cutting-edge innovations leading the charge. It’s a thrilling time for the AI industry, and we can’t wait to see where GPUs will take us next.

So, what’s next for AI and GPUs? With NVIDIA’s latest developments, it’s clear we’re just getting started.

The future is bright and exciting.

GPUs are fueling AI to reach new heights, making our wildest tech dreams possible. Hold on tight, because in this fast-paced world of AI, powered by GPUs, we’re in for a thrilling journey.

Let’s look forward to what’s coming next!

Thanks for reading 🙂

From Gaming to AI: How GPUs are driving the Technology till Artificial Intelligence was originally published in Artificial Intelligence in Plain English on Medium, where people are continuing the conversation by highlighting and responding to this story.

https://ai.plainenglish.io/from-gaming-to-ai-how-gpus-are-driving-the-technology-till-artificial-intelligence-b5cc81759120?source=rss—-78d064101951—4

By: Adithya Thatipalli

Published Date: Tue, 06 Jun 2023 12:22:39 GMT