Transfer Learning in Deep Learning: Leveraging Pretrained Models for Improved Performance

Deep learning is a type of machine learning that involves neural networks training computers to perform complex tasks. Deep learning analyzes massive amounts of data, identifies patterns, and makes predictions, exhibiting significant implications for diverse industries, including autonomous driving, fraud detection, and medical diagnosis.

Transfer learning is a machine learning technique where a pre-trained model is used as a starting point for creating a fresh model, vastly reducing the data and computation needed for building a new model from scratch and saving time and resources while still producing high-quality results. Transfer learning also enables the application of AI and machine learning to domains with limited amounts of labeled data by leveraging the learned features of pre-trained models.

Deep learning and transfer learning have significant impacts on AI and machine learning models. They have practical applications in computer vision, natural language processing, and speech recognition, having enormous potential to transform our lives and work. This blog covers how transfer learning models use pre-trained models to yield better performance.

Summary

What are Pretrained Models

Pre-trained models refer to machine learning models trained on a large dataset for a specific task or problem. By saving the learned parameters and features, further operations can be performed without having to repeat training on the same dataset. Pre-trained models are popular in deep learning as they save significant computational resources and time by enabling transfer learning techniques. These models are built on massive datasets and use sophisticated architectures, performing well in many real-world applications. The pre-trained models can be fine-tuned or modified for different downstream tasks, such as object recognition, image classification, natural language processing, and speech recognition.

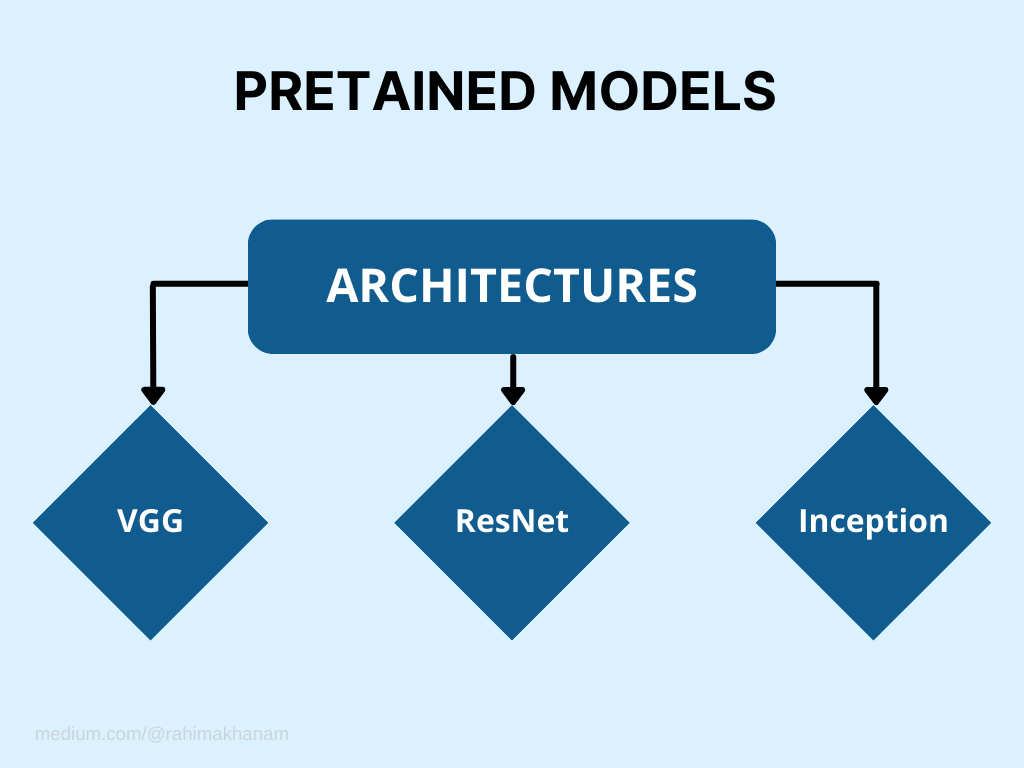

I. Architectures of various Pretained models

Various pre-trainer models like VGG, ResNet, and Inception are popular convolutional neural network architectures used extensively in the computer vision community for numerous operations, especially image classification. Their architecture is explained below.

- VGG (Visual Geometry Group) is a convolutional neural network architecture designed by the Visual Geometry Group at the University of Oxford. It has 16–19 layers and performs exceptionally well on image classification tasks due to its deep architecture. The VGG network uses a fixed size of 224 x 224 RGB input images, and the entire network is composed of only 3×3 convolutional layers and max pooling layers with different depths. The network ends with a fully connected layer of 1000 neurons for classification.

- ResNet (Residual Network) is a deep neural network architecture proposed by Microsoft Research. It solves the problem of the vanishing gradient in deep networks by using identity connections, also known as skip connections. These connections skip over one or more layers and connect the input directly to a later layer in the network. ResNet architecture comes in variants from ResNet-18 to ResNet-152 with varying layer numbers. ResNet-50 is the widely used architecture due to its efficient parameter use.

- Inception is a convolutional neural network architecture designed by Google Inc. It is also known as GoogleNet. The Inception network uses a combination of different-sized convolutional filters (1×1, 3×3, and 5×5) at the same level in the network and concatenates their outputs. The network also uses dimensionality reduction and pooling layers to reduce the number of parameters in the network. The Inception architecture starts with an initial 7×7 convolutional layer and ends with a fully connected layer.

II. Approaches of leveraging Pretained models

There are various approaches to leveraging pre-trained models, such as weight transfer and fine-tuning. These save time for training and improve performance in new tasks. These approaches explain below.

- Weight transfer is a technique used in deep learning where pre-trained weights from a model trained on a large dataset transfer to another model, which is then fine-tuned on a smaller dataset to perform a specific task. Fine-tuning involves adjusting the weights of the transferred model to fit the requirements of the new model and the new dataset. This approach is beneficial in situations with limited data for the addressed problem. By leveraging the knowledge already acquired by the pre-trained model, the fine-tuned model can achieve better performance with less training data and within a shorter training time.

- The fine-tuning approach involves modifying only the last few layers of the pre-trained model, which predict the output of the specific task. The earlier layers of the model, which detect general features, are mostly unchanged since they are helpful for the new model. By modifying only the last few layers, the fine-tuning approach reduces the risk of overfitting the proposed model to the limited data by preventing the weights from being adjusted too much.

What is Transfer Learning

Transfer learning is a machine learning technique that uses a pre-trained model (usually a neural network) as a starting point for a new model. The pre-trained model has typically been trained on a large dataset and learned general features being used to classify a wide range of data. The proposed model trains on a smaller, more specific dataset and the weights of the initial model are modified to adapt to the newly collected data. This approach can vastly reduce the data and computation needed for developing a new model from scratch. It can often lead to better performance than training the model from scratch.

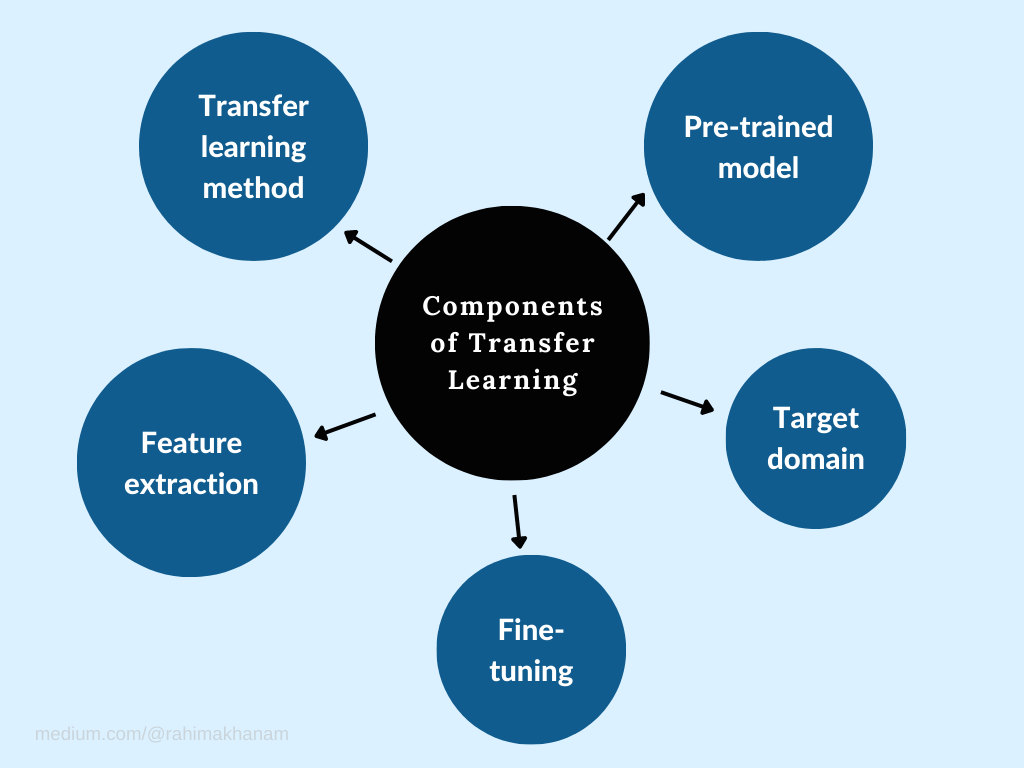

I. Components of Transfer Learning

Transfer learning includes:

- Pre-trained model: A pre-trained model is a deep learning algorithm trained on a large dataset for a specific task, such as object recognition or natural language processing. The pre-trained model develops for a particular domain or purpose.

- Target model: The target model is the model for which the pre-trained model is used. Depending on the model requirements, the target domain may be related to the pre-trained model or entirely different.

- Fine-tuning: Fine-tuning is adapting a pre-trained model to a new goal by changing its parameters. The model is tweaked for the newly developed domain to improve its performance.

- Feature extraction: Feature extraction is to identify relevant features from the pre-trained model and use them in a new domain. These features help generalize the model to a new model.

- Transfer learning method: There are different transfer learning methods, such as domain adaptation, multi-task learning, and learning with privileged information. These methods bridge the gap between the source and target domains.

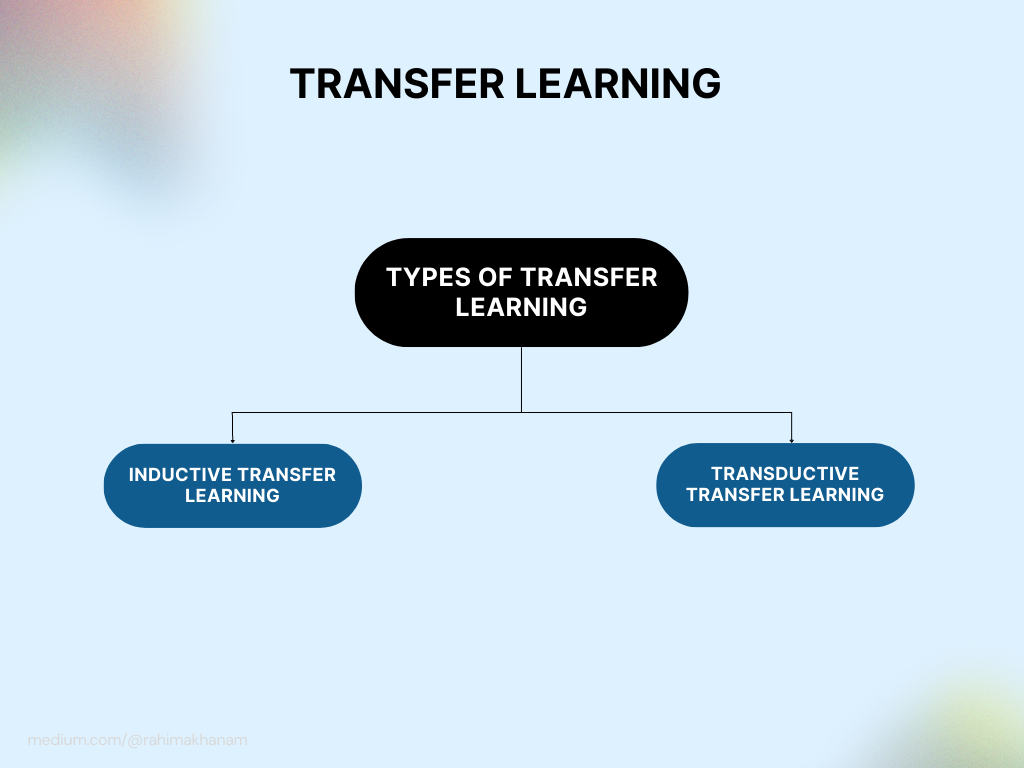

II. Types of Transfer Learning

Transfer Learning has two types:

- Inductive transfer learning: In this type of transfer learning, the knowledge learned from a source model improves the target model’s performance. The source and target models are different but share identical features or structures. The goal of inductive transfer learning is to leverage the knowledge learned from the source model and apply it to the target model to improve its accuracy. This type of transfer learning is used widely in computer vision and natural language processing tasks.

- Transductive transfer learning: In transductive transfer learning, the model trains to make predictions about new data points similar to the training data. The difference between inductive and transductive transfer learning is that in transductive transfer learning, the target model is the same as the source model, and the goal is to improve the performance on newly generated data points. Transductive transfer learning uses semi-supervised learning, where only a small amount of labeled data is available, and the model needs to be trained on a more extensive set of unlabeled data to improve its performance.

Applications of Transfer Learning

There are various applications of Transfer learning which are explained below:

I. Image processing

Transfer learning applies in Image processing in various applications, such as:

- Object detection: Transfer learning transfers knowledge from a pre-trained model to detect objects in a newly generated image.

- Image classification: Transfer learning classifies images by reusing a pre-trained model.

- Image segmentation: Transfer learning transfers knowledge from a pre-trained model to perform image segmentation, which involves dividing an image into different regions.

- Style transfer: Transfer learning transfers the style of one image to another by using a pre-trained model.

- Image enhancement: Transfer learning transfers knowledge from a pre-trained model to enhance image quality, such as denoising, deblurring, and super-resolution.

- Facial recognition: Transfer learning transfers facial features knowledge from a pre-trained model to identify and recognize faces in a newly created image.

- Medical image analysis: Transfer learning transfers knowledge from a pre-trained model to diagnose and analyze medical images, such as CT and MRI scans, to identify various diseases and conditions.

II.Natural language processing

Transfer learning has the potential to significantly improve natural language processing models’ performance even when training data is limited. They are used in the following ways:

- Sentiment Analysis: Transfer learning can improve sentiment analysis tasks by leveraging pre-trained models on large datasets in related domains.

- Named Entity Recognition (NER): Transfer learning can help improve NER by training on a pre-trained model on a related task and fine-tuning it using a smaller dataset in a specific domain.

- Text Classification: Transfer learning can be applied to improve text classification tasks, such as spam detection, by using pre-trained models and fine-tuning them with domain-specific data.

- Language Generation: Transfer learning generates coherent and grammatical sentences using pre-trained models.

- Question Answering Systems: Transfer learning can help question-answering systems learn domain-specific features. It can help reduce the amount of annotated data required for training.

- Machine Translation: Transfer learning can help machine translation systems generalize and improve translations by reusing pre-trained models for related tasks.

- Text Summarization: Transfer learning can help generate quality summaries by fine-tuning pre-trained models on domain-specific data, making it possible to generate meaningful summaries even with limited training data.

III.Speech recognition

Transfer learning is applied in speech recognition to improve model accuracy and reduce training time. Some famous applications include:

- Fine-tuning pre-trained models: Speech recognition often involves fine-tuning pre-trained models to the target model or language of interest. For example, a pre-trained model built on a large dataset of English audio recordings may not perform well on a specific task that involves recognizing accents or dialects. By fine-tuning the model on a smaller dataset of recordings in the desired dialect, the model adapts to perform better on the specific task.

- Feature extraction: Another common approach to transfer learning in speech recognition involves using pre-trained models to extract features from audio data, to apply as input to a separate model trained on a specific task or domain. For example, a pre-trained model designed to extract spectrogram features from audio data provides data to a speech recognition model trained to recognize spoken words or phrases in a specific language.

- Multi-task learning: Transfer learning trains models to perform multiple related tasks simultaneously. For example, a model trained to recognize speech in one language may be the starting point for preparing a model to identify speech in another language. As a result of sharing some or all parameters between the two models, transfer learning can improve the performance of both models.

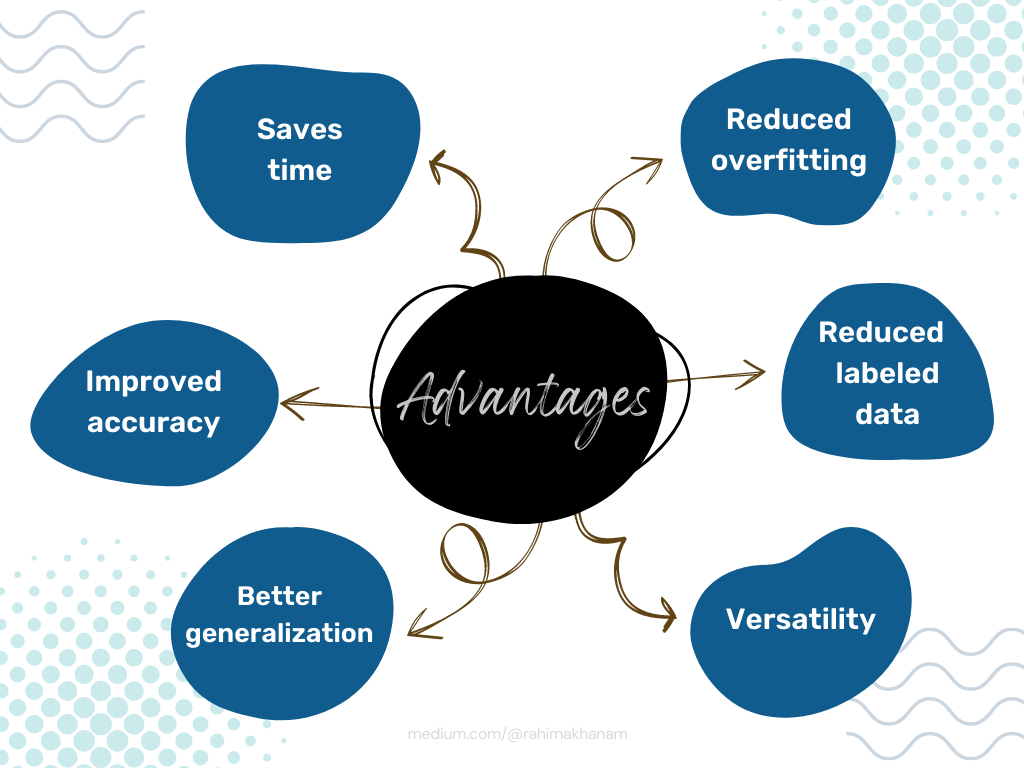

Advantages of Transfer Learning

Transfer learning is a machine learning technique where a pre-trained model works as a starting point for developing another model to solve a similar problem. Transfer learning has the following advantages:

- Saves time: Training a deep neural network from scratch requires a lot of labeled data, massive computational resources, and time. However, using a pre-trained model as a starting point can reduce the number of iterations required to achieve accurate results, saving time.

- Improved accuracy: Transfer learning can lead to improved accuracy on the newly initiated task since the pre-trained model has already learned some of the features and patterns in the data that can be helpful for the upcoming model.

- Better generalization: Transfer learning can lead to better generalization as the pre-trained model obtains using a large dataset similar to the new dataset.

- Reduced overfitting: Transfer learning can reduce the risk of overfitting since the pre-trained model has already learned relevant features useful for the new model.

- Reduced need for labeled data: Since the pre-trained model has already learned some features, transfer learning requires less labeled data, making it a crucial advantage in domains where labeled data is scarce or expensive.

- Versatility: Transfer learning is versatile since the pre-trained models can be reused in numerous tasks, making the technique highly valuable for applications that require multiple models.

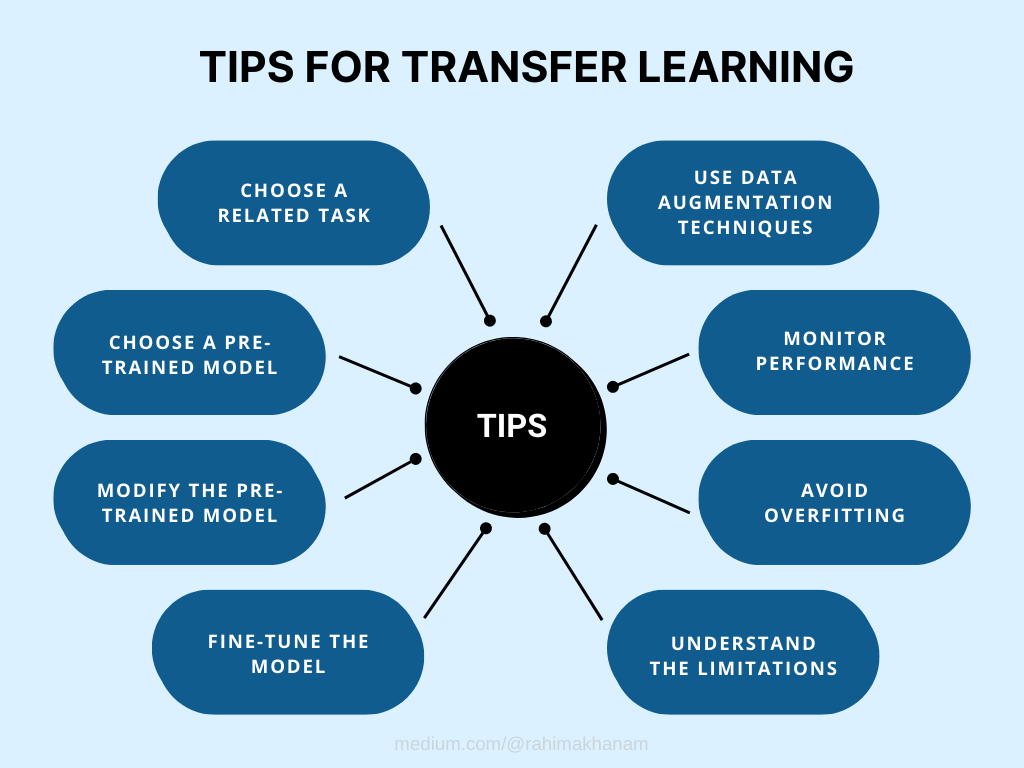

Tips for Transfer Learning

You can use transfer learning for your next project by following these tips:

- Choose a related task: Choose a model related to your current model. Similarities should exist so that the gained knowledge applies effectively to the current domain.

- Choose a pre-trained model: Look for pre-trained models available for the related model. These models have been built on vast data and can significantly reduce training time and resources.

- Modify the pre-trained model: Modify the pre-trained model to fit your specific task. You can adjust the number of layers, add/remove layers, and adjust the hyperparameters until you get the desired performance.

- Fine-tune the model: After modifying the pre-trained model, you can fine-tune it for your specific data. This process optimizes the model for your data and task.

- Use data augmentation techniques: Use data augmentation techniques to increase the amount of data available for training. These techniques can include flipping, rotating, or modifying images and text.

- Monitor performance: Monitor the model’s performance continuously to ensure improvement. Test the model on a validation set and adjust it accordingly.

- Avoid overfitting: Overfitting is a common problem with transfer learning. When training a transfer learning model, use regularization techniques to avoid overfitting.

- Understand the limitations: Transfer learning has limitations where it may not always work for all tasks. Understanding the drawbacks and deciding whether transfer learning is appropriate for your project is crucial.

Conclusion

Transfer learning is a technique used in machine learning to reuse a pre-trained model for a new task or domain rather than starting from scratch. Pre-trained models are neural networks that have already been built on a large dataset and fine-tuned for a different dataset or problem with less data and time. This approach improves the performance and efficiency of machine learning models, reduces the data and computation requirements for training, and enables pre-trained models to solve challenging tasks or problems with limited data. Transfer learning also helps to overcome the overfitting problem, reduces training time, and enhances the overall model performance.

However, transfer learning still faces some limitations, including the lack of a standardized evaluation methodology and the challenge of scaling transfer learning to large and complex datasets. Various creative approaches need examinations, such as meta-learning and few-shot learning. Additionally, transfer learning should extend outside traditional fields to include non-visual and non-linguistic domains. As part of future research and development, ethical considerations such as bias and fairness need careful consideration.

References

- Transfer Learning In NLP

- What Is Transfer Learning? Exploring the Popular Deep Learning Approach.

- Transfer Learning: The Highest Leverage Deep Learning Skill You Can Learn

More content at PlainEnglish.io.

Sign up for our free weekly newsletter. Follow us on Twitter, LinkedIn, YouTube, and Discord.

Transfer Learning in Deep Learning: Leveraging Pretrained Models for Improved Performance was originally published in Artificial Intelligence in Plain English on Medium, where people are continuing the conversation by highlighting and responding to this story.

https://ai.plainenglish.io/transfer-learning-in-deep-learning-leveraging-pretrained-models-for-improved-performance-b4c49f2cd644?source=rss—-78d064101951—4

By: Rahima Khanam

Title: Transfer Learning in Deep Learning: Leveraging Pretrained Models for Improved Performance

Sourced From: ai.plainenglish.io/transfer-learning-in-deep-learning-leveraging-pretrained-models-for-improved-performance-b4c49f2cd644?source=rss—-78d064101951—4

Published Date: Mon, 26 Jun 2023 04:17:19 GMT